Introduction

Multivariate data analysis via chemometric techniques is what allows us to take NIR spectra and translate them into useful information for process monitoring and control. The success of your chemometric technique depends on calibrating it with an appropriate dataset of representative samples and corresponding reference data. However, generating and characterizing all those calibration samples is expensive, a cost referred to as calibration burden.

Definition of Calibration Burden

Calibration burden describes the sum of the time, material, and financial requirements for calibrating a chemometric technique. The concept can be expanded to include the need for subject matter expertise and recurring method maintenance costs. The material and financial costs are particularly concerning during early drug development, when limited quantities of API are available and it is prohibitively expensive to synthesize more. Calibration burden provides a useful framework for evaluating and comparing the investment required by different chemometric techniques for your NIR spectroscopy PAT application.

Calculating the Calibration Burden

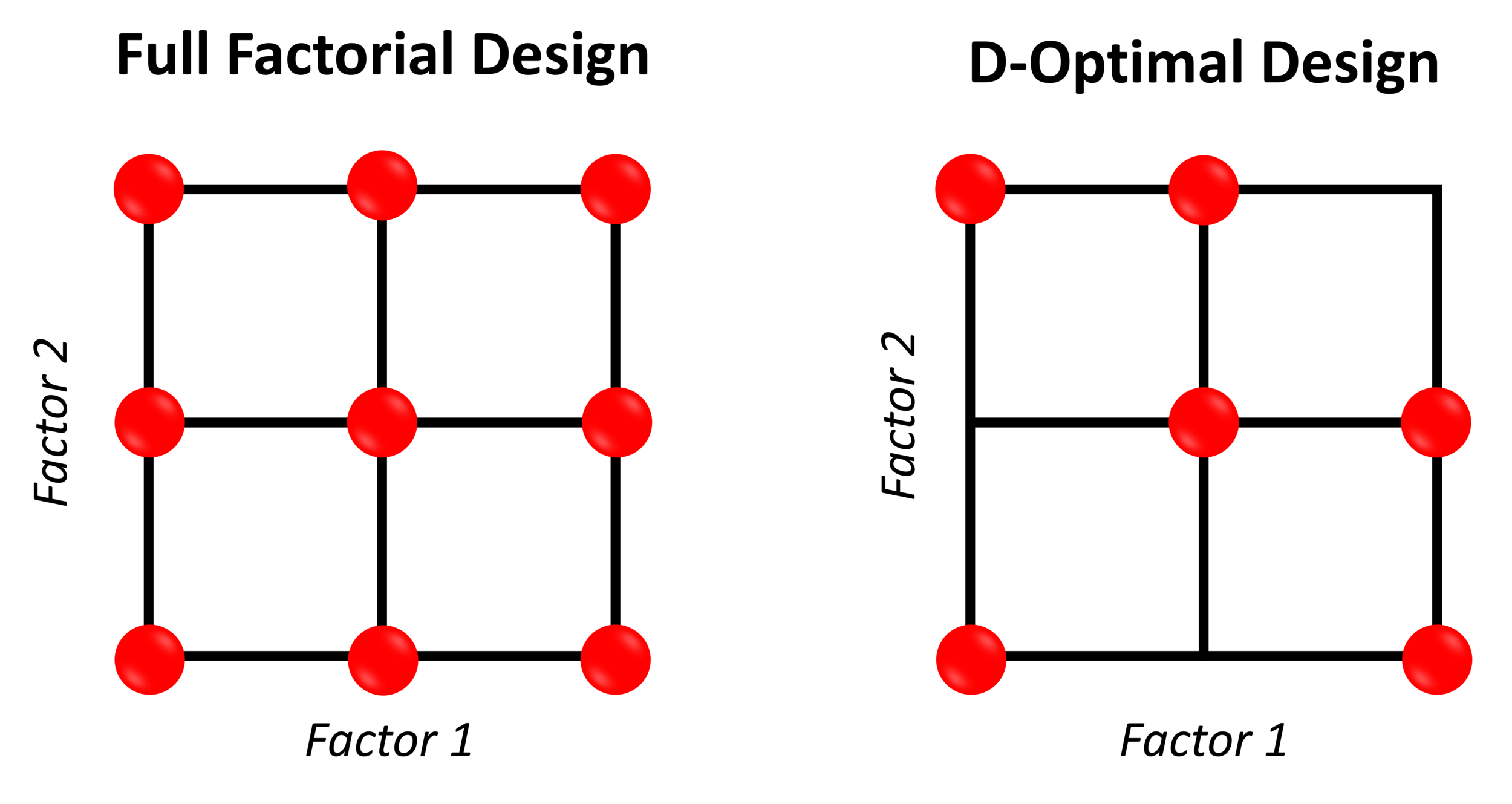

A simplified way of calculating the calibration burden of a particular chemometric technique is to count the number of representative samples required by the calibration design. For example, constructing a PLS regression model using a full factorial (FF) design is more burdensome than constructing one using a D-optimal design. The accompanying figure shows the difference in the number of required representative samples between the FF and D-optimal designs for two-factor, three-level designs.
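To make the sample-count comparison concrete, here is a minimal Python sketch that enumerates the nine points of a two-factor, three-level FF design and then picks a six-point subset by maximizing det(XᵀX). Several things here are assumptions rather than details from the article: the coded levels (-1, 0, 1), the two-factor quadratic model, the exhaustive search, and all function names. Six points is the minimum that can support that quadratic model, and it is consistent with the run counts used in the worked example, but dedicated DOE software may use a different model, candidate set, or search algorithm.

```python
from itertools import combinations, product

LEVELS = (-1, 0, 1)  # assumed coded low / mid / high levels for each factor


def full_factorial(n_factors=2, levels=LEVELS):
    """Every combination of levels: len(levels) ** n_factors points."""
    return list(product(levels, repeat=n_factors))


def quadratic_row(point):
    """Model terms for an assumed two-factor quadratic model."""
    x1, x2 = point
    return [1.0, x1, x2, x1 * x2, x1 * x1, x2 * x2]


def determinant(matrix):
    """Determinant by Gaussian elimination with partial pivoting."""
    a = [row[:] for row in matrix]
    n = len(a)
    det = 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            return 0.0  # singular: the points cannot support the model
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            det = -det
        det *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return det


def d_criterion(points):
    """det(X^T X) for the model matrix X built from the chosen points."""
    x = [quadratic_row(p) for p in points]
    n_terms = len(x[0])
    xtx = [[sum(row[i] * row[j] for row in x) for j in range(n_terms)]
           for i in range(n_terms)]
    return determinant(xtx)


def d_optimal_subset(candidates, n_points):
    """Exhaustive search; feasible here because C(9, 6) is only 84 subsets."""
    return max(combinations(candidates, n_points), key=d_criterion)


ff_points = full_factorial()
d_opt_points = d_optimal_subset(ff_points, n_points=6)
print(len(ff_points), "FF points;", len(d_opt_points), "D-optimal points")
```

Exhaustive search only works because the candidate set is tiny. For larger designs, exchange algorithms are the usual choice, and several subsets can tie on the D-criterion, so the specific points returned here are one valid answer rather than the only one.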

To break this down further into the specific time, material, and financial aspects of sample generation, imagine developing a PLS regression model with each calibration design for monitoring a pharmaceutical powder mixture during bin blending (in a 50 L pilot-scale blender) using a SentroPAT DA. The hypothetical powder blend contains an API at a concentration of 25% w/w and two excipients, and our calibration design varies the API potency and the ratio of the excipients at three levels each. Each calculation assumes two replicates per calibration design point. The costs for each aspect of calibration burden for the two designs are listed below:

- Time: Assuming 15 minutes per sample for weighing and blending, the time cost is 4.5 hours for the FF design and 3 hours for the D-optimal design.

- Material: Assuming a 20 kg material requirement per sample for our pilot-scale blender, the material cost is 360 kg for the FF design and 240 kg for the D-optimal design.

- Financial: Setting the cost of API at between $10 and $10,000 USD per kg and the cost of each excipient at $2.50 USD per kg, the financial cost of materials ranges from $1,575 USD (low end) to approximately $901K USD (high end) for the FF design, and from $1,050 USD (low end) to approximately $600K USD (high end) for the D-optimal design.
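The arithmetic behind these figures can be reproduced with a short Python sketch. The class and function names are hypothetical, and the calculation rests on two assumptions: the FF and D-optimal designs have 9 and 6 design points respectively (which is what the stated times imply at two replicates and 15 minutes per sample), and every sample is costed at the nominal 25% w/w API loading.

```python
from dataclasses import dataclass


@dataclass
class BurdenInputs:
    """Inputs for one calibration design (hypothetical helper type)."""
    design_points: int
    replicates: int = 2
    minutes_per_sample: float = 15.0
    batch_mass_kg: float = 20.0
    api_fraction: float = 0.25            # nominal 25% w/w, assumed for every sample
    api_cost_per_kg: float = 10.0         # USD
    excipient_cost_per_kg: float = 2.50   # USD


def calibration_burden(inp):
    """Return sample count, time, material, and material cost for a design."""
    samples = inp.design_points * inp.replicates
    material_kg = samples * inp.batch_mass_kg
    api_kg = material_kg * inp.api_fraction
    excipient_kg = material_kg - api_kg
    return {
        "samples": samples,
        "time_hours": samples * inp.minutes_per_sample / 60,
        "material_kg": material_kg,
        "cost_usd": api_kg * inp.api_cost_per_kg
        + excipient_kg * inp.excipient_cost_per_kg,
    }


# Assumed design sizes: 9 points (FF) and 6 points (D-optimal).
for name, points in (("FF", 9), ("D-optimal", 6)):
    low = calibration_burden(BurdenInputs(points, api_cost_per_kg=10.0))
    high = calibration_burden(BurdenInputs(points, api_cost_per_kg=10_000.0))
    print(
        f"{name}: {low['samples']} samples, {low['time_hours']} h, "
        f"{low['material_kg']} kg, "
        f"${low['cost_usd']:,.0f} to ${high['cost_usd']:,.0f} USD"
    )
```

Keeping the inputs in one place makes it easy to test other scenarios, such as a smaller blender or a different number of replicates, before committing to a calibration design.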

Calibration Burden during PAT Development

The impact of calibration burden, and the limitations it places on chemometric techniques, are major considerations for PAT practitioners during method development. This drives a desire for strategies and techniques that reduce the calibration burden. Selecting an efficient calibration design, such as D-optimal over FF, is one such strategy. An alternative approach is to use lean chemometric techniques instead of the conventional choice of PLS, but we will have to save that discussion for next time!